by Sarang Nagmote

More Information & Updates Available at: http://insightanalytics.co.in

Lately I’ve been on the road, giving talks about web application security. JSON Web Tokens (JWTs) are the new hotness, and I’ve been trying to demystify them and explain how they can be used securely. In the latest iteration of this talk, I give some love to Angular and explain how I’ve solved authentication issues in that framework.

However, the guidelines in this talk are applicable to any front-end framework.

Below you will find a video recording of this talk and a textual write-up of the content. I hope you will find it useful!

Status Quo: Session Identifiers

We’re familiar with the traditional way of doing authentication: a user presents a username and password. In return, we create a session ID for them and we store this ID in a cookie. This ID is a pointer to the user in the database.

This seems pretty straightforward, so why are JWTs trying to replace this scheme? Session identifiers have these general limitations:

- They’re opaque, and contain no meaning themselves. They’re just pointers.

- As such, they can be database heavy: you have to look up user information and permission information on every request.

- If the cookies are not properly secured, it is easy to steal (hijack) the user’s session.

JWTs can give you some options regarding the database performance issues, but they are not more secure by default. The major attack vector for session IDs is cookie hijacking. We’re going to spend a lot of time on this issue, because JWTs have the same vulnerability.

Cookies, The Right Way ®

JWTs are not more secure by default. Just like session IDs, you need to store them in the browser. The browser is a hostile environment, but cookies are actually the most secure location, if used properly!

To use cookies securely, you need to do the following:

- Only transmit cookies over HTTPS/TLS connections. Set the Secure flag on cookies that you send to the browser, so that the browser never sends the cookie over non-secure connections. This is important, because a lot of systems have HTTP redirects in them and they don’t always redirect to the HTTPS version of the URL. This will leak the cookie. Stop this by preventing it at the browser level with the Secure flag.

- Protect yourself against Cross-Site-Scripting attacks (XSS). A well-crafted XSS attack can hijack the user’s cookies. The easiest way to prevent XSS-based cookie hijacking is to set the HttpOnly flag on the cookies that you send to the browser. This will prevent those cookies from being read by the JavaScript environment, making it impossible for an XSS attack to read the cookie values.

You should also implement proper content escaping, to prevent all forms of XSS attacks.
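As a rough illustration of the two flags, here is a minimal sketch that builds a `Set-Cookie` header value by hand. The helper name `buildSessionCookie`, the cookie name `access_token`, and the `Max-Age` and `Path` choices are assumptions made for this example; most web frameworks expose equivalent cookie options.

```javascript
// Build a Set-Cookie header value carrying the Secure and HttpOnly flags.
// buildSessionCookie and the cookie name are hypothetical, for illustration only.
function buildSessionCookie(name, value, maxAgeSeconds) {
  return [
    name + '=' + encodeURIComponent(value),
    'Max-Age=' + maxAgeSeconds,
    'Path=/',
    'Secure',   // the browser only sends the cookie over HTTPS
    'HttpOnly'  // the cookie is hidden from document.cookie
  ].join('; ');
}

var header = buildSessionCookie('access_token', 'abc.def.ghi', 60);
console.log(header);

// In a plain Node.js HTTP handler this could be sent with:
//   res.setHeader('Set-Cookie', header);
```

Setting both flags on every authentication cookie keeps the decision in one place, so a stray HTTP redirect or an injected script cannot expose the value.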

Protect Yourself from Cross-Site-Request-Forgery

Compromised websites can make arbitrary GET requests to your web application, and the browser will send along the cookies for your domain. Your server should not implicitly trust a request merely because it has session cookies.

You should implement Double Submit Cookies by setting an xsrf-token cookie on login. All AJAX requests from your front-end application should append the value of this cookie as the X-XSRF-Token header. (Your JavaScript has to read this cookie, so unlike the session cookie it cannot be HttpOnly.) Only scripts running on your own origin can read the cookie, and the browser’s Same-Origin Policy stops other sites from attaching a custom header to cross-domain requests without your server’s permission.

As such, your server should reject any request that does not see a match between the supplied X-XSRF-Token header and xsrf-token cookie. The value of the cookie should be a highly random, un-guessable string.
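A minimal server-side sketch of the Double Submit Cookies check, using only Node.js's built-in `crypto` module. The function names `createXsrfToken` and `isXsrfValid` are assumptions for this example, and how the cookie and header values are read from the request depends on your framework.

```javascript
var crypto = require('crypto');

// Generate the random, un-guessable value to place in the xsrf-token cookie on login.
function createXsrfToken() {
  return crypto.randomBytes(32).toString('hex');
}

// Compare the xsrf-token cookie with the X-XSRF-Token header.
// Both values are passed in as strings (or undefined when missing).
function isXsrfValid(cookieValue, headerValue) {
  if (typeof cookieValue !== 'string' || typeof headerValue !== 'string') {
    return false;
  }
  var a = Buffer.from(cookieValue);
  var b = Buffer.from(headerValue);
  // timingSafeEqual throws on length mismatch, so check lengths first.
  if (a.length === 0 || a.length !== b.length) {
    return false;
  }
  return crypto.timingSafeEqual(a, b);
}

var xsrfToken = createXsrfToken();
console.log(isXsrfValid(xsrfToken, xsrfToken));   // matching cookie and header
console.log(isXsrfValid(xsrfToken, undefined));   // header missing
```

A request that fails this check should be rejected before any session cookie is considered.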

Introducing JSON Web Tokens (JWTs)!

Whoo, new stuff! JWTs are a useful addition to your architecture. As we talk about JWTs, the following terms are useful to define:

- Authentication is proving who you are.

- Authorization is being granted access to resources.

- Tokens are used to persist authentication and get authorization.

- JWT is a token format.

What’s in a JWT?

In the wild they look like just another ugly string:

eyJ0eXAiOiJKV1QiLA0KICJhbGciOiJIUzI1NiJ9.eyJpc3MiOiJqb2UiLA0KICJleHAiOjEzMDA4MTkzODAsDQogImh0dHA6Ly9leGFtcGxlLmNvbS9pc19yb290Ijp0cnVlfQ.dBjftJeZ4CVPmB92K27uhbUJU1p1r_wW1gFWFOEjXk

But they do have a three part structure. Each part is a Base64-URL encoded string:

eyJ0eXAiOiJKV1QiLA0KICJhbGciOiJIUzI1NiJ9.eyJpc3MiOiJqb2UiLA0KICJleHAiOjEzMDA4MTkzODAsDQogImh0dHA6Ly9leGFtcGxlLmNvbS9pc19yb290Ijp0cnVlfQ.dBjftJeZ4CVPmB92K27uhbUJU1p1r_wW1gFWFOEjXk

Base64-decode the parts to see the contents:

Header:

    {
      "typ": "JWT",
      "alg": "HS256"
    }

Claims Body:

    {
      "iss": "http://trustyapp.com/",
      "exp": 1300819380,
      "sub": "users/8983462",
      "scope": "self api/buy"
    }

Cryptographic Signature:

tß´—™à%O˜v+nî…SZu¯µ€U…8H×
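The decoding step can be tried out in Node.js. The sketch below splits the sample string from this section at its dots and Base64-URL-decodes each part; the helper names `base64UrlDecode` and `decodeJwt` are assumptions for this example. Note that the sample string carries its own claims (an issuer of `joe`), which differ from the illustrative claims body shown above, and that decoding is not verification: anyone can read these parts without knowing the signing key.

```javascript
// Convert Base64-URL text to a Buffer (restore the standard alphabet and padding).
function base64UrlDecode(part) {
  var base64 = part.replace(/-/g, '+').replace(/_/g, '/');
  while (base64.length % 4 !== 0) {
    base64 += '=';
  }
  return Buffer.from(base64, 'base64');
}

// Split a compact JWT into header, claims, and raw signature bytes.
// This only decodes; it does NOT check the signature.
function decodeJwt(token) {
  var parts = token.split('.');
  if (parts.length !== 3) {
    throw new Error('Expected three dot-separated parts');
  }
  return {
    header: JSON.parse(base64UrlDecode(parts[0]).toString('utf8')),
    claims: JSON.parse(base64UrlDecode(parts[1]).toString('utf8')),
    signature: base64UrlDecode(parts[2])
  };
}

var sampleToken =
  'eyJ0eXAiOiJKV1QiLA0KICJhbGciOiJIUzI1NiJ9' +
  '.eyJpc3MiOiJqb2UiLA0KICJleHAiOjEzMDA4MTkzODAsDQogImh0dHA6Ly9leGFtcGxlLmNvbS9pc19yb290Ijp0cnVlfQ' +
  '.dBjftJeZ4CVPmB92K27uhbUJU1p1r_wW1gFWFOEjXk';

var decoded = decodeJwt(sampleToken);
console.log(decoded.header);
console.log(decoded.claims);
```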

The Claims Body

The claims body is the best part! It asserts:

- Who issued the token (iss).

- When it expires (exp).

- Who it represents (sub).

- What they can do (scope).

Issuing JWTs

Who creates JWTs? You do! Actually, your server does. The following happens:

- User has to present credentials to get a token (password, API keys).

- Token payload is created, compacted and signed by a private key on your server.

- The client stores the tokens, and uses them to authenticate requests.

For creating JWTs in Node.js, I have published the nJwt library.
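To show what "created, compacted and signed" involves, here is a hand-rolled HS256 sketch using only Node.js's built-in `crypto` module. It is not the nJwt API; the function names `base64UrlEncode` and `signJwt` are assumptions for this example, and a maintained library is the better choice in production.

```javascript
var crypto = require('crypto');

// Base64-URL encoding: standard Base64 with '+', '/' swapped and padding removed.
function base64UrlEncode(input) {
  return Buffer.from(input).toString('base64')
    .replace(/\+/g, '-')
    .replace(/\//g, '_')
    .replace(/=+$/, '');
}

// Create a compact JWT signed with HMAC-SHA256 (the "HS256" algorithm).
function signJwt(claims, secret) {
  var header = { typ: 'JWT', alg: 'HS256' };
  var signingInput =
    base64UrlEncode(JSON.stringify(header)) + '.' +
    base64UrlEncode(JSON.stringify(claims));
  var signature = crypto.createHmac('sha256', secret)
    .update(signingInput)
    .digest();
  return signingInput + '.' + base64UrlEncode(signature);
}

// The signing key must stay on the server; here it is random per process.
var signingKey = crypto.randomBytes(32);
var issuedAt = Math.floor(Date.now() / 1000);

var newToken = signJwt({
  iss: 'http://trustyapp.com/',
  sub: 'users/8983462',
  scope: 'self api/buy',
  exp: issuedAt + 3600 // expires one hour from now
}, signingKey);

console.log(newToken);
```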

Verifying JWTs

Now that the client has a JWT, it can be used for authentication. When the client needs to access a protected endpoint, it will supply the token. Your server then needs to check the signature and expiration time of the token. Because this doesn’t require any database lookups, you now have stateless authentication… !!! Whoo!… ?

Yes, this saves trips to your database, and this is really exciting from a performance standpoint. But what if I want to revoke the token immediately, so that it can’t be used anymore, even before the expiration time? Read on to see how the access and refresh token scheme can help.
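The signature and expiration check described in this section can be sketched without any database access. This is a minimal HS256-only example built on Node.js's `crypto` module; the function names are assumptions for this example, and the demo token at the bottom is signed inline only so that the block runs on its own.

```javascript
var crypto = require('crypto');

function toBase64Url(input) {
  return Buffer.from(input).toString('base64')
    .replace(/\+/g, '-').replace(/\//g, '_').replace(/=+$/, '');
}

function fromBase64Url(text) {
  return Buffer.from(text.replace(/-/g, '+').replace(/_/g, '/'), 'base64');
}

function hmacSha256(input, secret) {
  return crypto.createHmac('sha256', secret).update(input).digest();
}

// Returns the claims when the token is authentic and unexpired, otherwise null.
function verifyJwt(token, secret, nowSeconds) {
  var parts = String(token).split('.');
  if (parts.length !== 3) return null;

  // 1. Recompute the signature over "header.claims" and compare in constant time.
  var expected = hmacSha256(parts[0] + '.' + parts[1], secret);
  var actual = fromBase64Url(parts[2]);
  if (actual.length !== expected.length ||
      !crypto.timingSafeEqual(actual, expected)) {
    return null;
  }

  // 2. Parse the parts and accept only the algorithm this server issues.
  var header;
  var claims;
  try {
    header = JSON.parse(fromBase64Url(parts[0]).toString('utf8'));
    claims = JSON.parse(fromBase64Url(parts[1]).toString('utf8'));
  } catch (e) {
    return null;
  }
  if (!header || header.alg !== 'HS256' || !claims) return null;

  // 3. Reject tokens without a numeric exp claim, or whose exp has passed.
  if (typeof claims.exp !== 'number' || claims.exp <= nowSeconds) return null;
  return claims;
}

// Demo: sign a short-lived token inline, then verify it three ways.
var demoSecret = 'demo-secret-change-me';
var demoNow = Math.floor(Date.now() / 1000);
var demoInput =
  toBase64Url(JSON.stringify({ typ: 'JWT', alg: 'HS256' })) + '.' +
  toBase64Url(JSON.stringify({ sub: 'users/8983462', exp: demoNow + 60 }));
var demoToken = demoInput + '.' + toBase64Url(hmacSha256(demoInput, demoSecret));

console.log(verifyJwt(demoToken, demoSecret, demoNow));        // fresh token
console.log(verifyJwt(demoToken, demoSecret, demoNow + 120));  // after expiry
console.log(verifyJwt(demoToken, 'wrong-secret', demoNow));    // wrong key
```

Nothing here can tell whether a token was revoked, which is exactly the gap raised above.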

JWT + Access Tokens and Refresh Tokens = OAuth2?

Just to be clear: there is not a direct relationship between OAuth2 and JWT. OAuth2 is an authorization framework that prescribes the need for tokens. It leaves the token format undefined, but most people are using JWT.

Conversely, using JWTs does not require a full-blown OAuth2 implementation.

In other words, like “all great artists”, we’re going to steal a good part from the OAuth2 spec: the access token and refresh token paradigm.

The scheme works like this:

- On login, the client is given an access token and refresh token.

- The access token expires before the refresh token.

- New access tokens are obtained with the refresh token.

- Access tokens are trusted by signature and expiration (stateless).

- Refresh tokens are checked for revocation (requires database of issued refresh tokens).

In other words: The scheme gives you time-based control over this trade-off: stateless trust vs. database lookup.

Some examples help to clarify the point:

- Super-Secure Banking Application. If you set the access token expiration to 1 minute, and the refresh token to 30 minutes: the user will be obtaining new access tokens every minute (giving an attacker less than 1 minute to use a hijacked token) and the session will be force terminated after 30 minutes.

- Not-So-Sensitive Social/Mobile/Toy Application. In this situation you don’t expose any personally identifiable information in your application, and you want to use as few server-side resources as possible. You set the access token expiration to 1 hour (or longer), and refresh token expiration to 4 years (the lifetime of a smart phone, if you’re frugal).
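A small sketch of the scheme, using the lifetimes from the banking example. An in-memory `Map` stands in for the database of issued refresh tokens, and the access token is represented by its claims only (in a real system it would be a signed JWT). All function names here are assumptions for this example.

```javascript
var crypto = require('crypto');

var ACCESS_TOKEN_TTL = 60;        // seconds: 1 minute
var REFRESH_TOKEN_TTL = 30 * 60;  // seconds: 30 minutes

// Stand-in for a database table of issued refresh tokens.
var issuedRefreshTokens = new Map();

// Claims for a short-lived access token; trusted statelessly until exp.
function newAccessClaims(userId, nowSeconds) {
  return { sub: userId, exp: nowSeconds + ACCESS_TOKEN_TTL };
}

// On login, hand out both tokens and record the refresh token.
function login(userId, nowSeconds) {
  var refreshToken = crypto.randomBytes(32).toString('hex');
  issuedRefreshTokens.set(refreshToken, {
    userId: userId,
    expiresAt: nowSeconds + REFRESH_TOKEN_TTL
  });
  return {
    refreshToken: refreshToken,
    accessClaims: newAccessClaims(userId, nowSeconds)
  };
}

// The only step that needs a lookup: revoked or expired refresh tokens fail here.
function refresh(refreshToken, nowSeconds) {
  var record = issuedRefreshTokens.get(refreshToken);
  if (!record || record.expiresAt <= nowSeconds) {
    return null;
  }
  return newAccessClaims(record.userId, nowSeconds);
}

// Revocation takes effect the next time the client tries to refresh.
function revoke(refreshToken) {
  issuedRefreshTokens.delete(refreshToken);
}

var session = login('users/8983462', 0);
console.log(refresh(session.refreshToken, 61)); // new access claims
revoke(session.refreshToken);
console.log(refresh(session.refreshToken, 62)); // no longer accepted
```

With these numbers a revoked user keeps access for at most one minute, the remaining life of the last access token.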

Storing & Transmitting JWTs (in the Browser)

As we’ve seen, JWTs provide some cool features for our server architecture. But the client still needs to store these tokens in a secure location. For the browser, this means we have a struggle ahead of us.

The Trade-Offs and Concerns to Be Aware Of:

- Local Storage is not secure. It has edge cases with the Same Origin Policy, and it’s vulnerable to XSS attacks.

- Cookies ARE secure, with HttpOnly, Secure flags, and CSRF prevention.

- Using the Authorization header to transmit the token is fun but not necessary.

- Cross-domain requests are always hell.

My Recommended Trade-Offs:

- Store the tokens in cookies with HttpOnly, Secure flags, and CSRF protection. CSRF protection is easy to get right, XSS protection is easy to get wrong.

- Don’t use the Authorization header to send the token to the server, as the cookies handle the transmission for you, automatically.

- Avoid cross-domain architectures if possible, to prevent the headache of implementing CORS responses on your server.

With this proposed cookie-based storage scheme for the tokens, your server authentication flow will look like this:

- Is there an access token cookie?

  - No? Reject the request.

  - Yes?

    - Was it signed by me, and not expired?

      - Yes? Allow the request.

      - No? Try to get a new access token, using the refresh token.

        - Did that work?

          - Yes? Allow the request, send new access token on response as cookie.

          - No? Reject the request, delete refresh token cookie.
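The decision tree above can be written as one small function. This is a sketch under assumptions: the cookie names `access_token` and `refresh_token`, the function `authenticateRequest`, and the two injected helpers (`verifyAccessToken` for the stateless signature and expiry check, `refreshAccessToken` for the database-backed refresh) are all hypothetical. The stubs at the bottom exist only to make the example runnable.

```javascript
// cookies: object of cookie name -> value, as parsed from the request.
// helpers.verifyAccessToken(token) returns claims, or null if invalid or expired.
// helpers.refreshAccessToken(token) returns { token, claims }, or null on failure.
function authenticateRequest(cookies, helpers) {
  // Is there an access token cookie? No: reject.
  if (!cookies.access_token) {
    return { allow: false };
  }

  // Was it signed by me, and not expired? Yes: allow.
  var claims = helpers.verifyAccessToken(cookies.access_token);
  if (claims) {
    return { allow: true, claims: claims };
  }

  // Otherwise try to get a new access token, using the refresh token.
  var renewed = cookies.refresh_token
    ? helpers.refreshAccessToken(cookies.refresh_token)
    : null;
  if (renewed) {
    // Allow, and send the new access token back as a cookie.
    return {
      allow: true,
      claims: renewed.claims,
      setCookies: { access_token: renewed.token }
    };
  }

  // Refresh failed: reject, and delete the refresh token cookie.
  return { allow: false, clearCookies: ['refresh_token'] };
}

// Stub helpers so the flow can be exercised without real tokens.
var stubHelpers = {
  verifyAccessToken: function (token) {
    return token === 'valid-access' ? { sub: 'users/8983462' } : null;
  },
  refreshAccessToken: function (token) {
    return token === 'valid-refresh'
      ? { token: 'valid-access', claims: { sub: 'users/8983462' } }
      : null;
  }
};

console.log(authenticateRequest({ access_token: 'valid-access' }, stubHelpers));
console.log(authenticateRequest(
  { access_token: 'expired', refresh_token: 'valid-refresh' }, stubHelpers));
console.log(authenticateRequest(
  { access_token: 'expired', refresh_token: 'revoked' }, stubHelpers));
```

Returning a plain result object keeps the flow independent of any web framework; the caller turns `setCookies` and `clearCookies` into response headers.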

AngularJS Patterns for Authentication

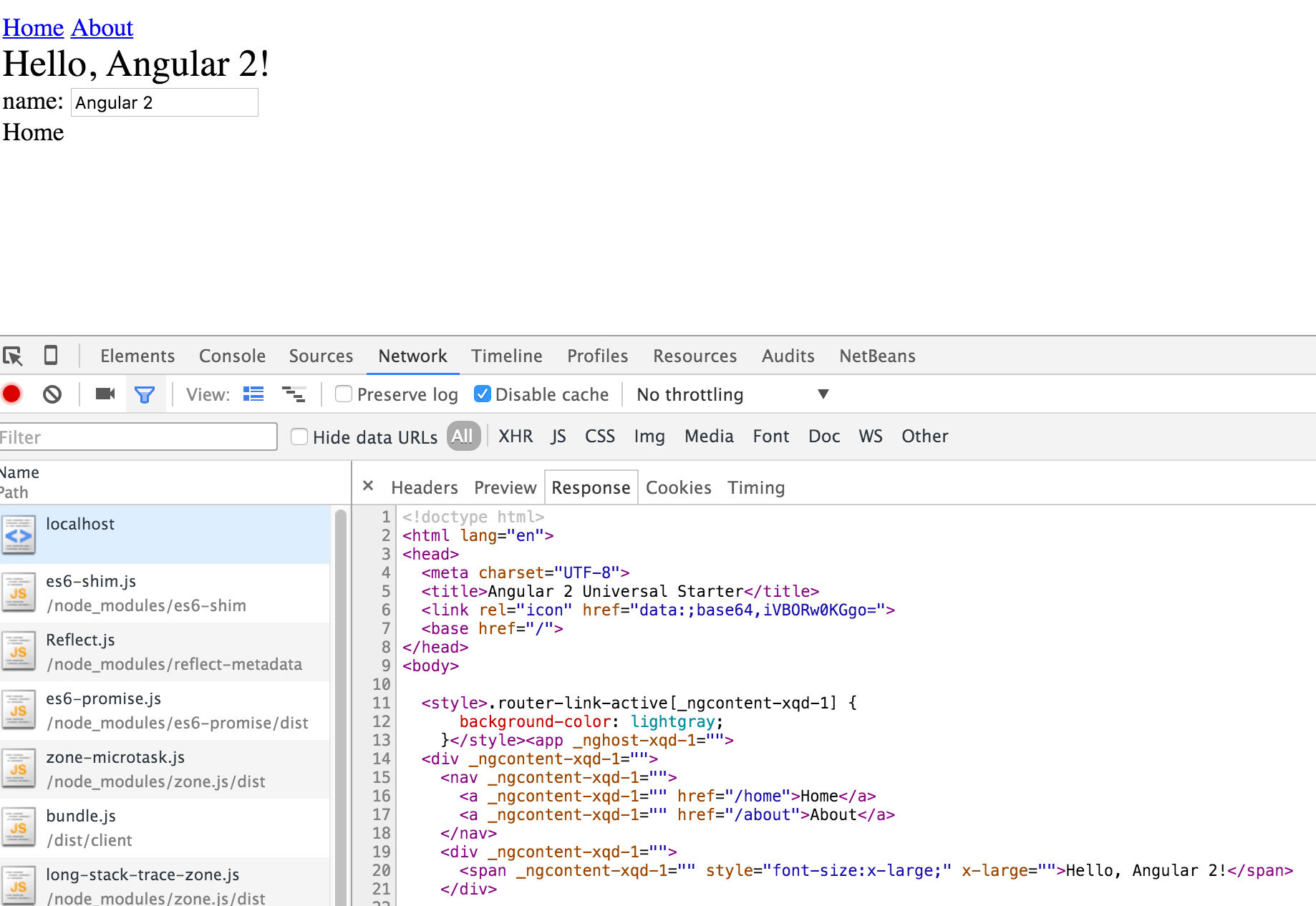

Because we are using cookies to store and transmit our tokens, we can focus on authentication and authorization at a higher level. In this section we’ll cover the main stories you need to implement, and some suggestions of how to do this in an Angular (1.x) way.

How Do I Know If the User Is Logged In?

Because our cookies are hidden from the JavaScript environment, via the HttpOnly flag, we can’t use the existence of that cookie to know if we are logged in or not. We need to make a request of our server, to an endpoint that requires authentication, to know if we are logged in or not.

As such, you should implement an authenticated /me route which will return the user information that your Angular application requires. If they are not logged in, the endpoint should return 401 Unauthorized.

Requesting that endpoint should be the very first thing that your application does. You can then share the result of that operation a few ways:

- With a Promise. Write an $auth service, and have a method $auth.getUser(). This is just a simple wrapper around the $http call to the /me endpoint. This should return a promise which returns the cached result of requesting the /me endpoint. A 401 response should cause the promise to be rejected.

- Maintain a user object on root scope. Create a property $rootScope.user, and have your $auth service maintain it like so:

  - null means we are resolving user state by requesting /me.

  - false means we saw a 401, the user is not logged in.

  - {} assign the user object if you got a logged-in response from the /me endpoint.

- Emit an event. Emit an $authenticated event when the /me endpoint returns a logged in response, and emit the user data with this event.
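One possible shape for such a service, combining all three options. This is a sketch, not a definitive implementation: `authServiceFactory` is a hypothetical name, it is written as a plain function so it can be exercised with stand-ins for `$http`, `$q`, and `$rootScope`, and it uses `$broadcast` so that child scopes also receive the event.

```javascript
// Factory for the $auth service. In an AngularJS 1.x app it could be registered with:
//   angular.module('myapp')
//     .factory('$auth', ['$http', '$q', '$rootScope', authServiceFactory]);
function authServiceFactory($http, $q, $rootScope) {
  var userPromise = null;  // cached so that /me is requested only once
  $rootScope.user = null;  // null: user state is still being resolved

  function getUser() {
    if (userPromise === null) {
      userPromise = $http.get('/me').then(function (response) {
        // Logged in: publish the user object and announce it.
        $rootScope.user = response.data;
        $rootScope.$broadcast('$authenticated', response.data);
        return response.data;
      }, function (rejection) {
        if (rejection && rejection.status === 401) {
          $rootScope.user = false;  // false: we saw a 401, not logged in
        }
        // Keep the promise rejected so route resolves fail.
        return $q.reject(rejection);
      });
    }
    return userPromise;
  }

  return { getUser: getUser };
}
```

Because the promise is cached, calling `$auth.getUser()` from several route resolves costs a single request. A real application would also need a way to clear the cache after login or logout, which this sketch leaves out.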

Which of these options you implement is up to you, but I like to implement all of them. The promise is my favorite, because it allows you to add “login required” configuration to your router states, using the resolve features of ngRoute or uiRouter. For example:

    angular.module('myapp')
      .config(function($stateProvider) {
        $stateProvider
          .state('home', {
            url: '/',
            templateUrl: 'views/home.html',
            resolve: {
              // The state only loads once this promise resolves.
              user: function($auth) {
                return $auth.getUser();
              }
            }
          });
      });

If the user promise is rejected, you can catch the $stateChangeError and redirect the user to the login page.

How Do I Know If the User Can Access a View?

Because we aren’t storing any information in JavaScript-accessible cookies or local storage, we have to rely on the user data that comes back from the /me route. But with promises, this is very easy to achieve. Simply chain a promise off $auth.getUser() and make an assertion about the user data:

    $stateProvider
      .state('admin', {
        url: '/admin',
        templateUrl: 'views/admin-console.html',
        resolve: {
          user: function($auth, $q) {
            return $auth.getUser()
              .then(function(user) {
                // Reject for non-admins so the router cancels the transition;
                // resolving with false would still let the state load.
                return user.isAdmin === true
                  ? user
                  : $q.reject(new Error('Admin access required'));
              });
          }
        }
      });
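When a resolve promise is rejected, the legacy (0.x) ui-router API broadcasts `$stateChangeError` on the root scope, which is the event mentioned earlier. A minimal sketch of the redirect follows; the function name `redirectToLoginOnError` and the state name `login` are assumptions for this example, and newer ui-router versions replace these events with transition hooks.

```javascript
// Redirect to the login state whenever a state's resolve fails.
// In an AngularJS 1.x app this could be wired up with:
//   angular.module('myapp')
//     .run(['$rootScope', '$state', redirectToLoginOnError]);
function redirectToLoginOnError($rootScope, $state) {
  $rootScope.$on('$stateChangeError',
    function (event, toState, toParams, fromState, fromParams, error) {
      // error is the rejection value, e.g. the 401 response from /me.
      // 'login' is a hypothetical state name.
      $state.go('login');
    });
}
```

This sketch sends every failed resolve to the login view. An application that distinguishes "not logged in" from "not allowed" could inspect `error` and pick a different target state.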

How Do I Know When Access Has Been Revoked?

Again, we don’t know when the cookies expire because we can’t touch them :)

As such, you have to rely on an API request to your server. Using an $http interceptor, if you see a 401 response from any endpoint, other than the /me route, emit a $unauthenticated event. Subscribe to this event, and redirect the user to the login view.

Recap

I hope this information has been useful! Go forth and secure your cookies :)

As a quick recap:

- JWTs help with authentication and authorization architecture.

- They are NOT a “security” add-on. They don’t give more security by default.

- They’re more magical than an opaque session ID.

- Store JWTs securely!

If you’re interested in learning more about JWTs, check out my article on Token-Based Authentication for Single Page Apps and Tom Abbott’s article on how to Use JWT The Right Way for a great overview.